A technical deep dive into how PGDUMP works

Learn how PGDUMP works under the covers

March 11th, 2024

Neon DB is a fast-growing database that offers serverless Postgres. It's open source and gaining a lot of traction with individual developers working on side projects as well as businesses running mission-critical applications.

In this guide, we're going to walk through how you can seed your Neon database with synthetic data for testing and rapid development using Neosync. Neosync is an open source synthetic data orchestration company that can create anonymized or synthetic data and sync it across all of your Neon environments for better security, privacy and development.

Let's jump in.

We're going to need a Neon account and a Neosync account. If you don't already have those, we can get those here:

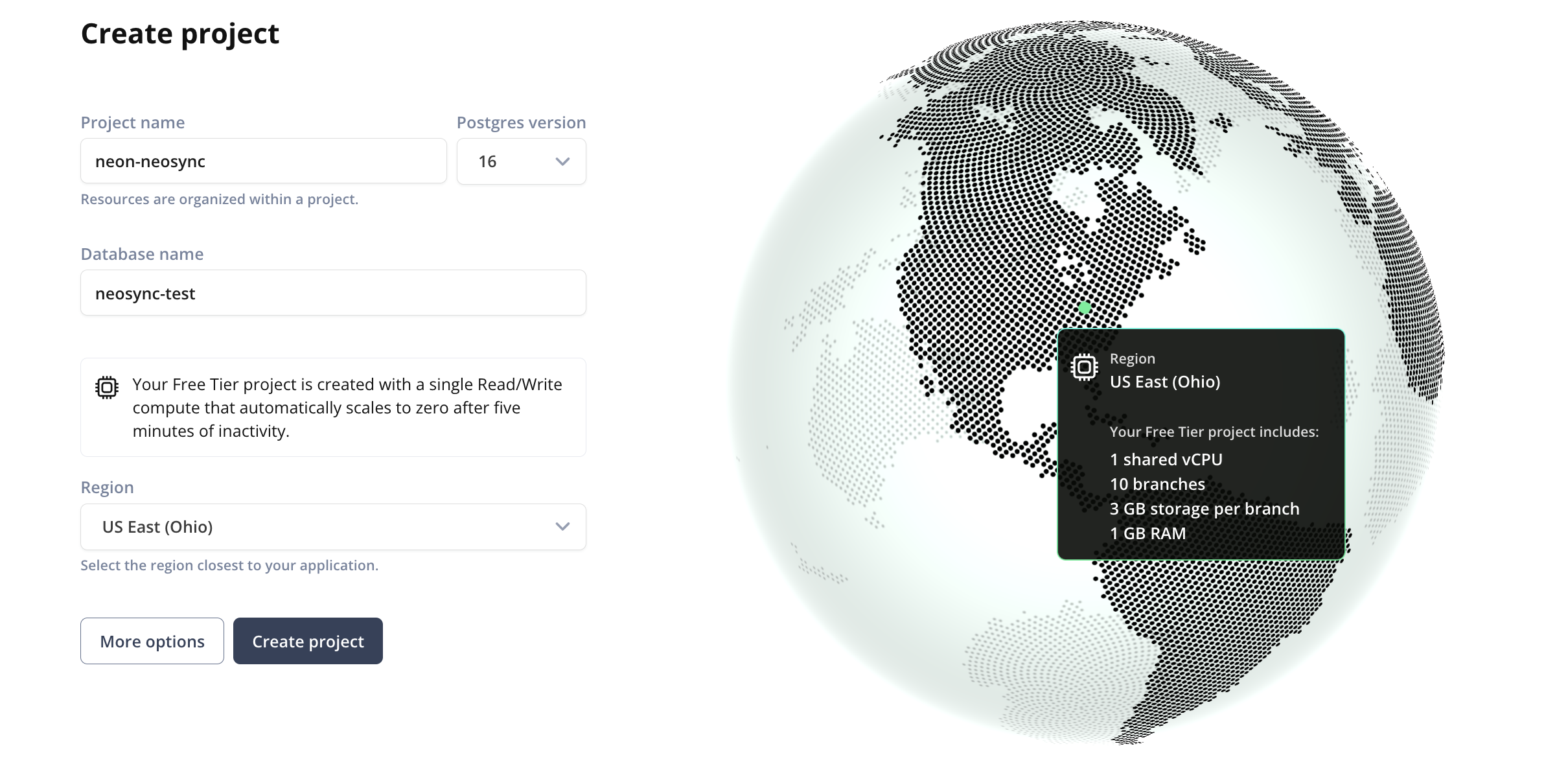

Now that we have our accounts, we can get this ball rolling. First, let's log into Neon. If you already have a Neon account then you can either create a new project or a new database. If you don't have a Neon account then give your project a name, your database a name and select a region like below:

Now we can click on Database on the left hand side in order to select the database that we created. If you already have a database set up then you can select the database that you want to act as your source database.

Next, we'll need to define our database schema. For this demo, we'll create one table but you can create as many tables as you need to.

Let's navigate to the SQL Editor and create our table. Here is the SQL script I ran to create our table in the public schema. If you have a UUID extension such as uuid-ossp installed, you can also set the id column to auto-generate IDs for you, or you can use Neosync to generate them.

CREATE TABLE public.users (

id UUID PRIMARY KEY,

first_name VARCHAR(255) NOT NULL,

last_name VARCHAR(255) NOT NULL,

email VARCHAR(255) NOT NULL,

age INTEGER NOT NULL

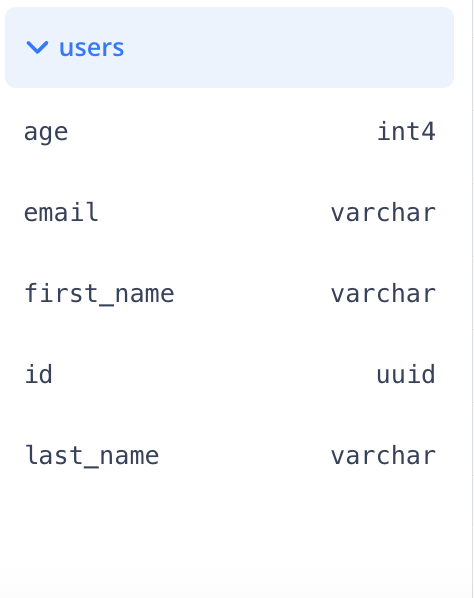

);We can do a quick sanity check by going to Tables and seeing that our table was successfully created.

Nice! Okay, last step for Neon. Let's get our connection string so we can connect to Neon from Neosync. We can find our connection string by going to Dashboard and then under the Connection String header, you can find your connection string. Hold onto this for a minute while we get Neosync set up.

Now that we're in Neosync, we'll want to first create a connection to our Neon database and then create a job to generate data. Let's get started.

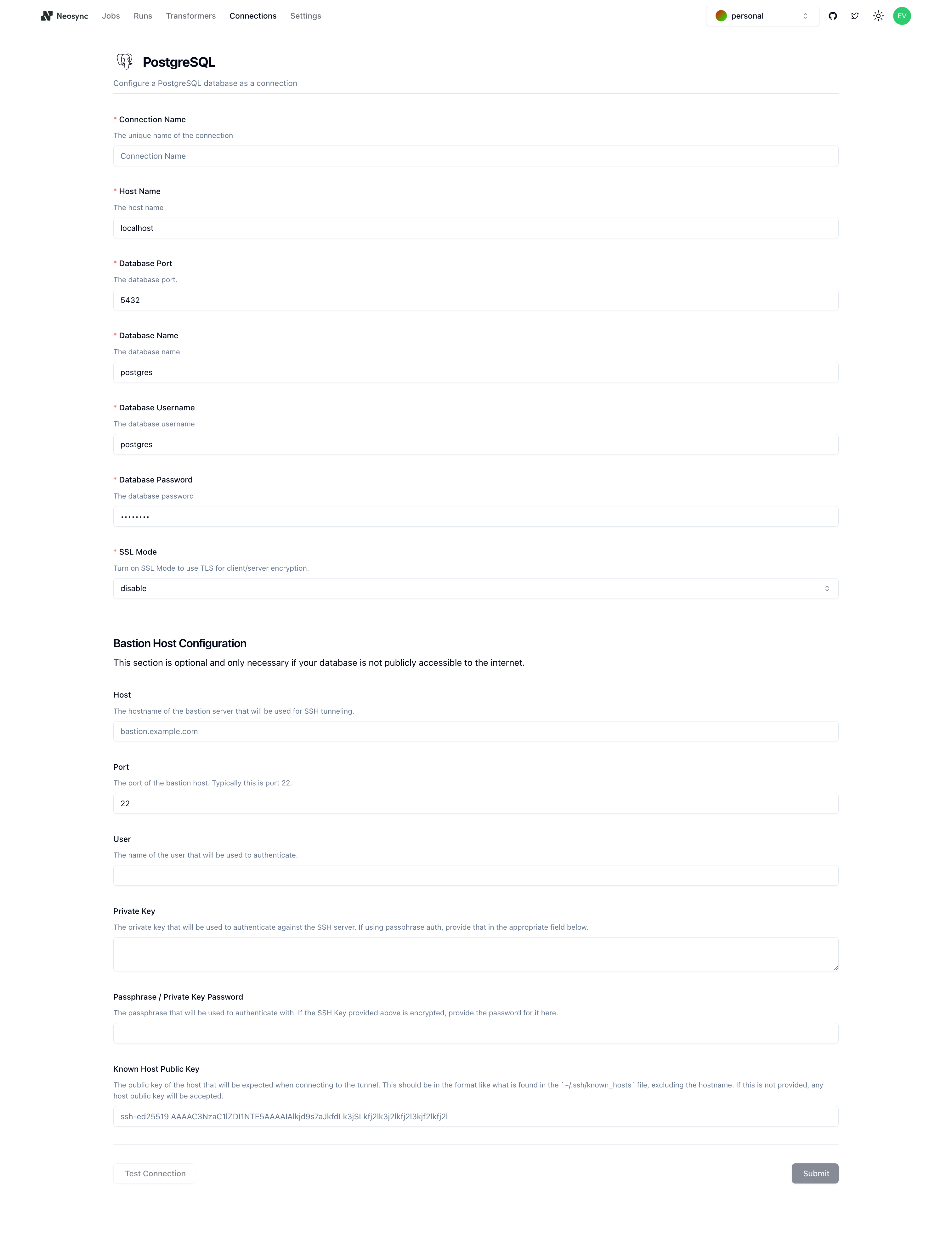

Navigate over to Neosync and log in. Once you're logged in, go to Connections -> New Connection, then click on Postgres.

You should see the above form. Since our Neon database is public we can ignore the bottom part about configuring a Bastion Host. Let's go ahead and start to fill out our Neon connection string in this form. Here's a handy guide of how to break up the connection string and map it to the fields in the form.

| Component | Value | Description |
|---|---|---|
| Protocol | postgresql:// | Indicates a connection to a PostgreSQL database. |
| Username | evis | The username for authenticating the connection. |
| Password | ************ | The password for authentication (hidden for security). |
| Host | ep-late-cherry-a5k4dfkr.us-east-2.aws.neon.tech | The hostname or IP address of the database server. |
| Name | neosync-test | The specific database name to connect to. |
| SSL Mode | sslmode=require | Specifies that SSL encryption is required for the connection. |
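If you'd rather not split the connection string by hand, the mapping in the table can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not part of Neon or Neosync: the function name `split_connection_string` is hypothetical, the password in the usage example is a made-up placeholder, and the fallback to port 5432 assumes the standard Postgres default when the string doesn't specify one.

```python
from urllib.parse import parse_qs, unquote, urlsplit


def split_connection_string(conn: str) -> dict:
    """Break a postgresql:// URL into the fields the connection form asks for."""
    parts = urlsplit(conn)
    query = parse_qs(parts.query)
    return {
        "protocol": parts.scheme + "://",
        "username": parts.username,
        # Passwords in URLs are percent-encoded, so decode before pasting.
        "password": unquote(parts.password) if parts.password else None,
        "host": parts.hostname,
        # Assumption: fall back to the default Postgres port when none is given.
        "port": parts.port or 5432,
        "name": parts.path.lstrip("/"),
        "sslmode": query.get("sslmode", [None])[0],
    }


# Example with a placeholder password (not a real credential).
fields = split_connection_string(
    "postgresql://evis:placeholder@ep-late-cherry-a5k4dfkr.us-east-2.aws.neon.tech"
    "/neosync-test?sslmode=require"
)
for key, value in fields.items():
    print(f"{key}: {value}")
```

Each key lines up with a row in the table above, so you can copy the values straight into the form.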

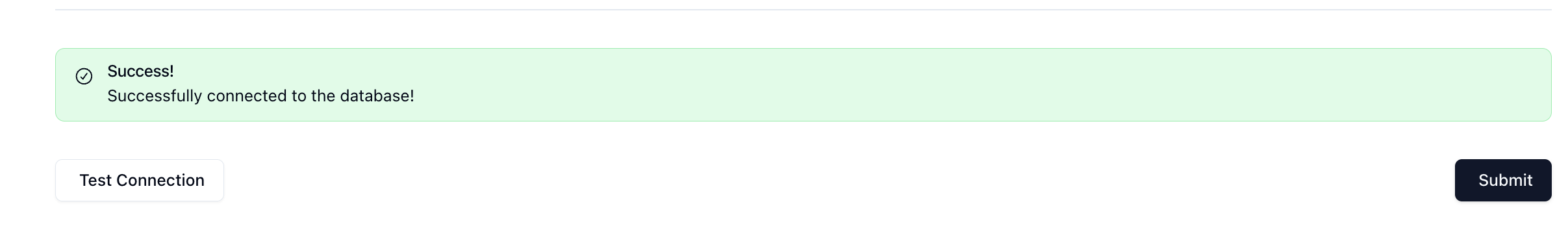

Once you've completed filling out the form, you can click on Test Connection to test that you're connected. You should see this if it passes:

Let's click Submit and move onto the last part.

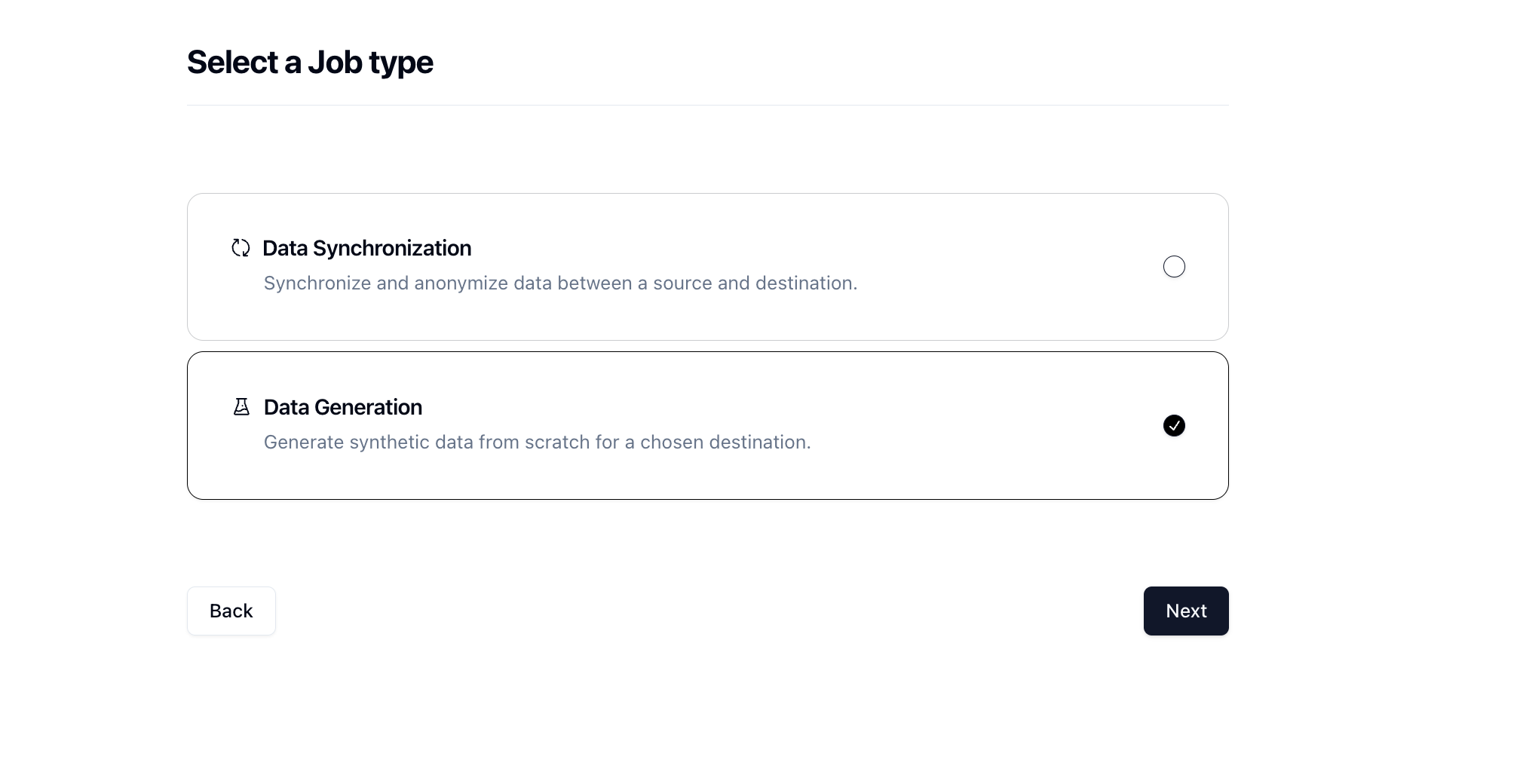

In order to generate data, we need to create a Job in Neosync. Let's click on Job and then click on New Job. We're now presented with two options:

Since we're seeding a table from scratch, we can select the Data Generation job and click Next.

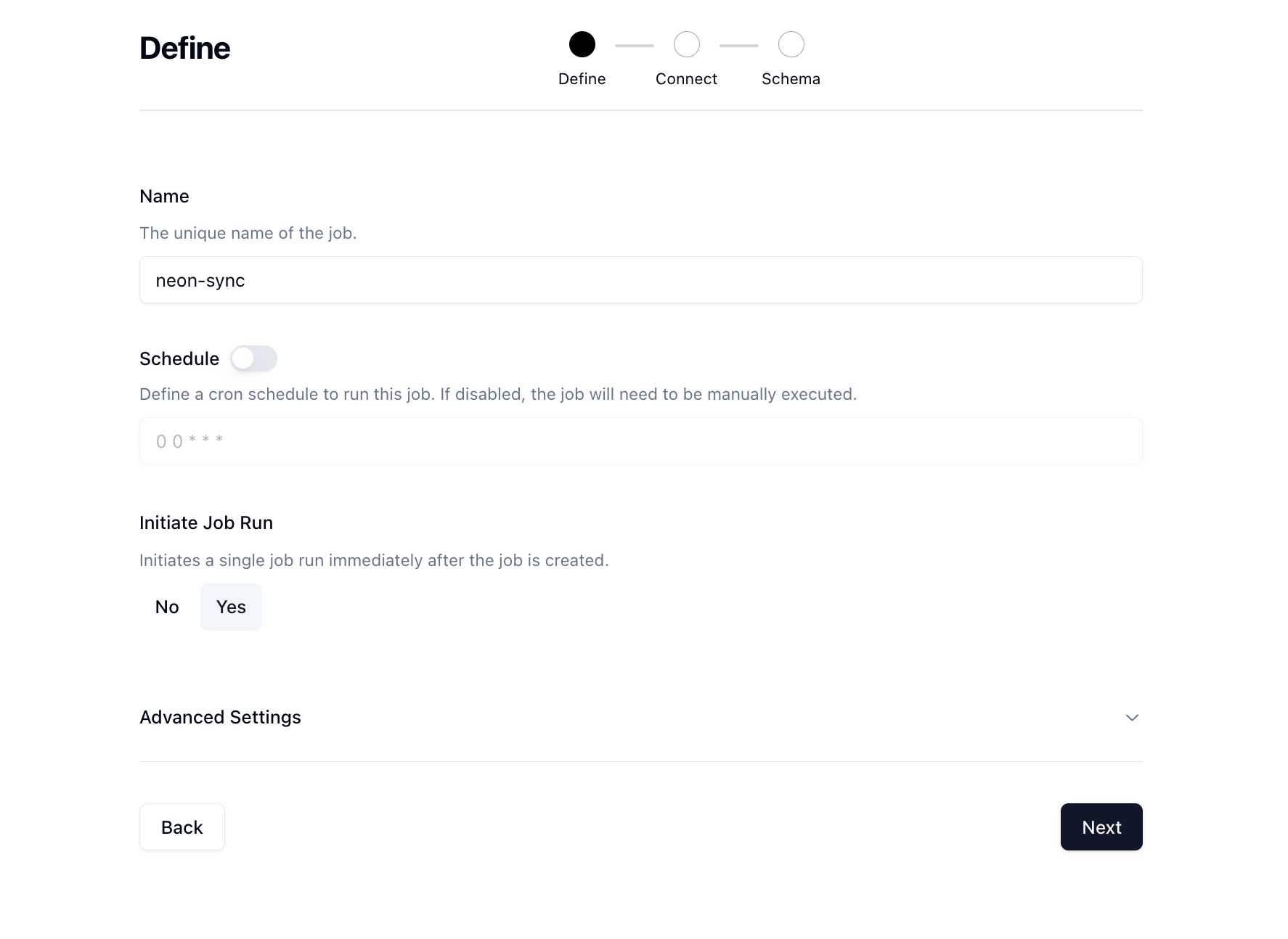

Let's give our job a name and then set Initiate Job Run to Yes. We can leave the schedule and advanced options alone for now.

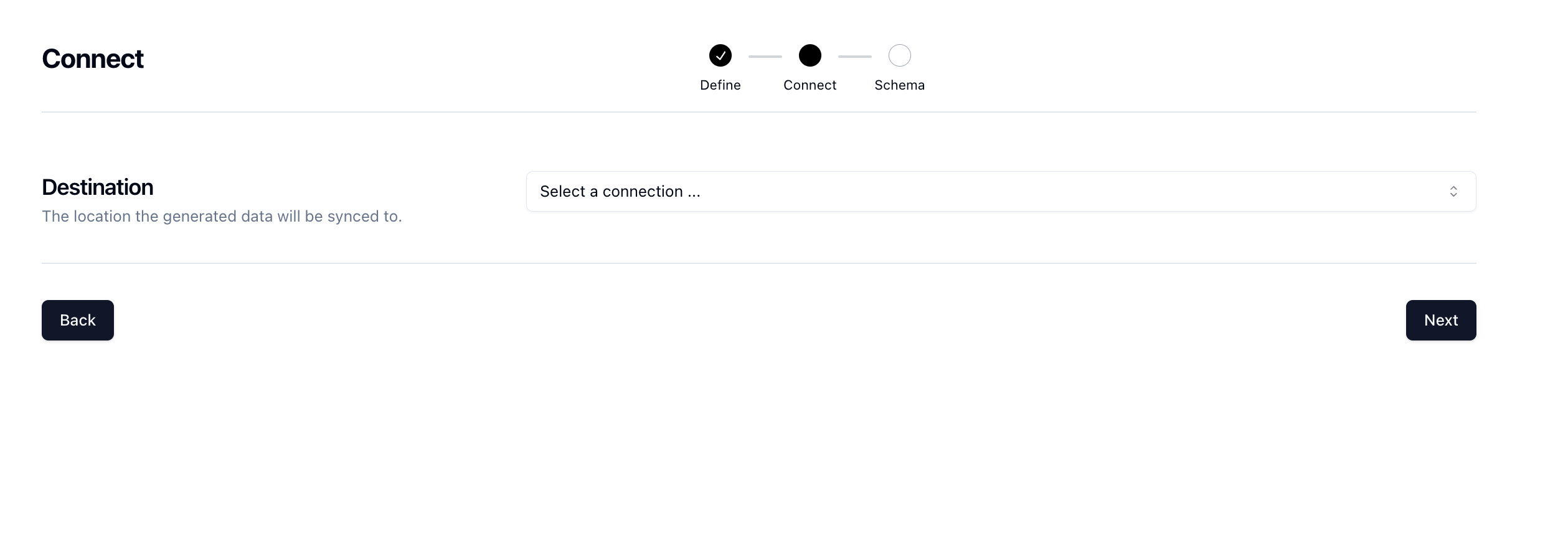

Click Next to move on to the Connect page. Here we want to select the connection we previously created from the dropdown.

There are some other options here that can be useful but we'll skip these for now and click Next.

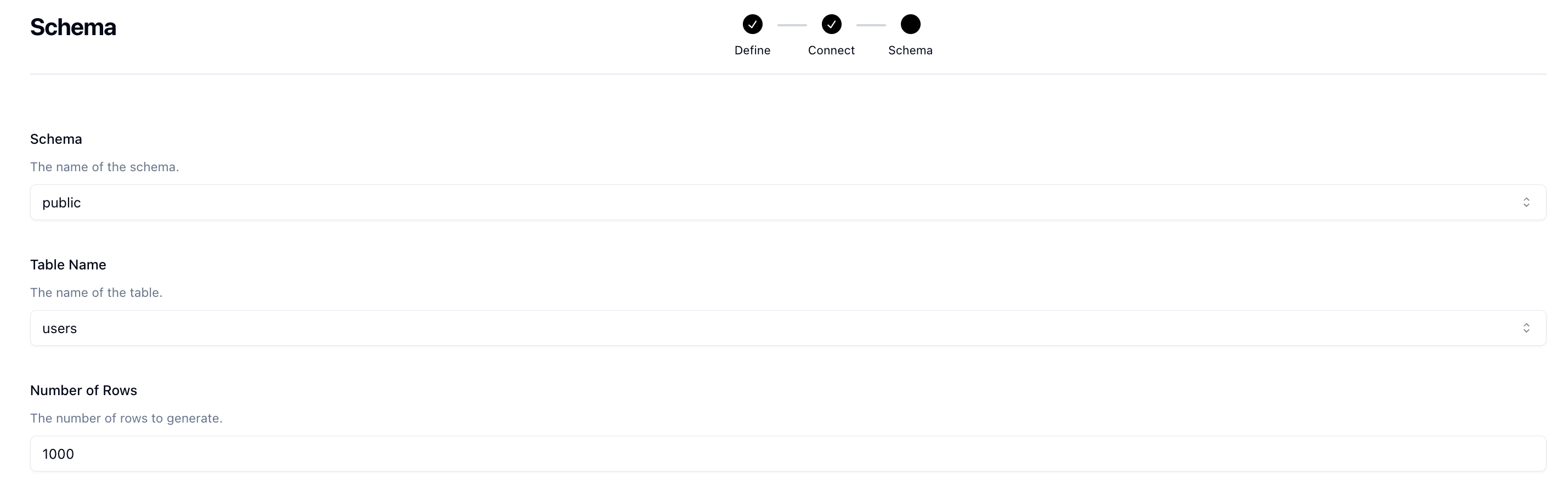

Now for the fun part. First, select your schema. Mine is the public schema but you may have another one. Next, select the table where you want to generate synthetic data. I'm going to select the users table.

Next, decide how many rows you want to create. For this run, I'll do 1000 rows.

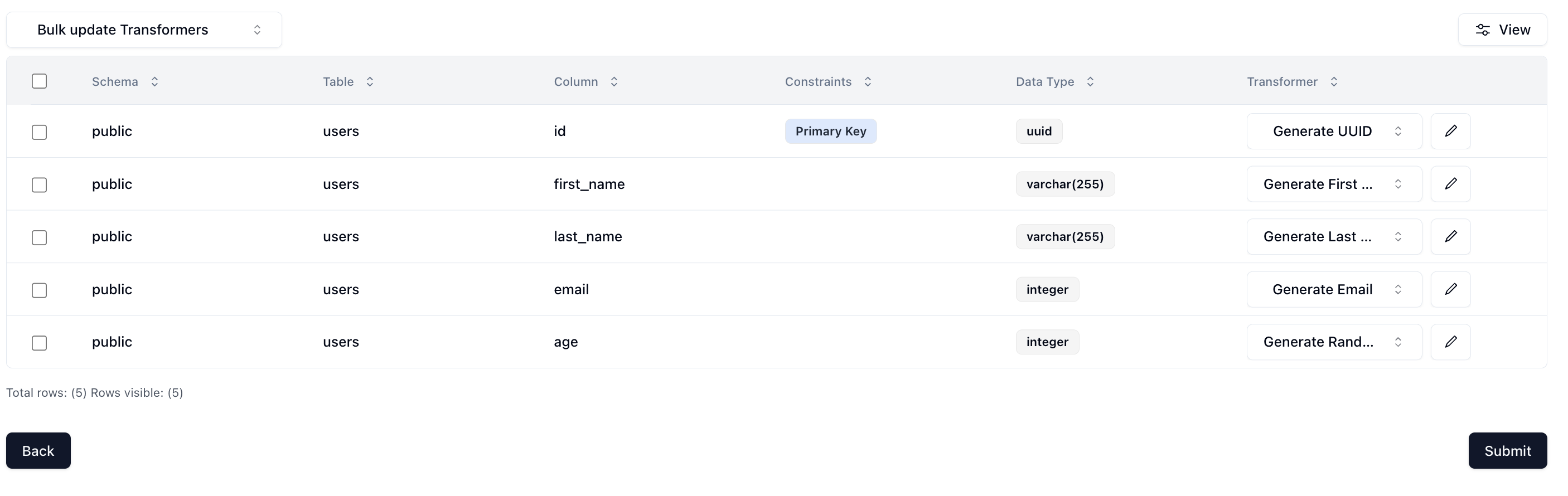

Lastly, we need to determine what kind of synthetic data we want to create and map that to our schema. Neosync has Transformers which are ways of creating synthetic data. Click on the Transformer and then select the right Transformer that maps to the right column. Here is what I have set up for the users table.

For the age column, I used the Generate Random Int64 Transformer to randomly generate ages between 18 and 40. You can configure that by clicking on the edit icon next to the transformer and setting your min and max.
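To get a feel for what this mapping produces, here is a rough Python sketch of the same idea: one generator per column, with ages drawn between a min and a max. This is not how Neosync implements its Transformers. The function `generate_users`, the small name lists, and the `example.com` email pattern are all assumptions made up for illustration.

```python
import random
import uuid

# Hypothetical sample values; a real generator would draw from far larger pools.
FIRST_NAMES = ["Ada", "Grace", "Linus", "Margaret", "Alan", "Barbara"]
LAST_NAMES = ["Lovelace", "Hopper", "Torvalds", "Hamilton", "Turing", "Liskov"]


def generate_users(count, min_age=18, max_age=40, seed=None):
    """Return `count` synthetic rows shaped like the users table."""
    rng = random.Random(seed)  # a seed makes runs repeatable
    rows = []
    for _ in range(count):
        first = rng.choice(FIRST_NAMES)
        last = rng.choice(LAST_NAMES)
        rows.append({
            # Build a version-4 UUID from 128 random bits.
            "id": str(uuid.UUID(int=rng.getrandbits(128), version=4)),
            "first_name": first,
            "last_name": last,
            "email": f"{first}.{last}.{rng.randrange(10**6)}@example.com".lower(),
            # randint is inclusive on both ends, like a min/max setting.
            "age": rng.randint(min_age, max_age),
        })
    return rows


users = generate_users(1000, min_age=18, max_age=40, seed=7)
print(len(users), users[0])
```

Changing `min_age` and `max_age` here plays the same role as editing the min and max on the transformer.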

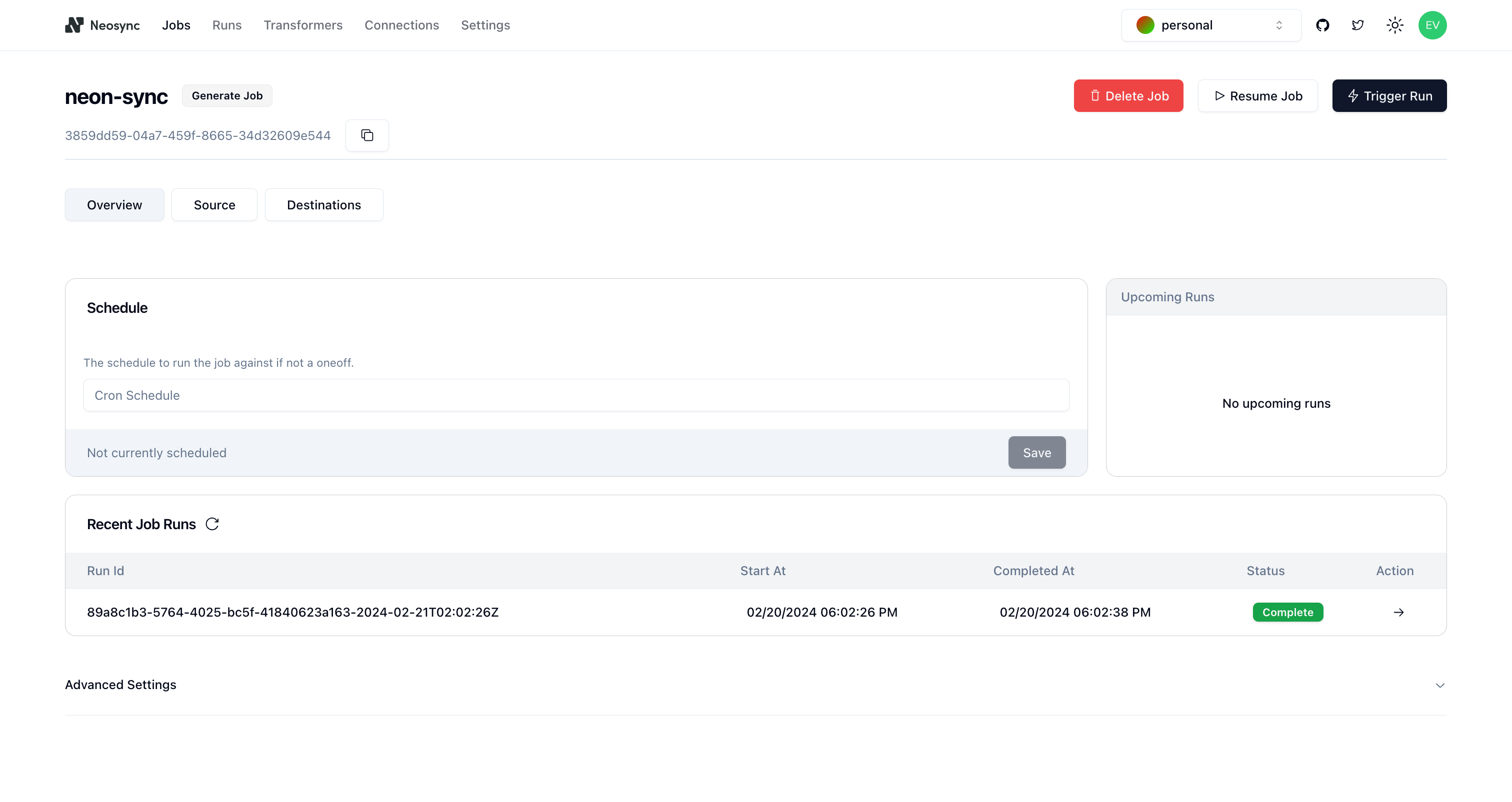

Now that we've configured everything, we can click on Next and create the job! We'll get routed to the Job page and see something like this:

You can see that our job ran successfully and in just 12 seconds!

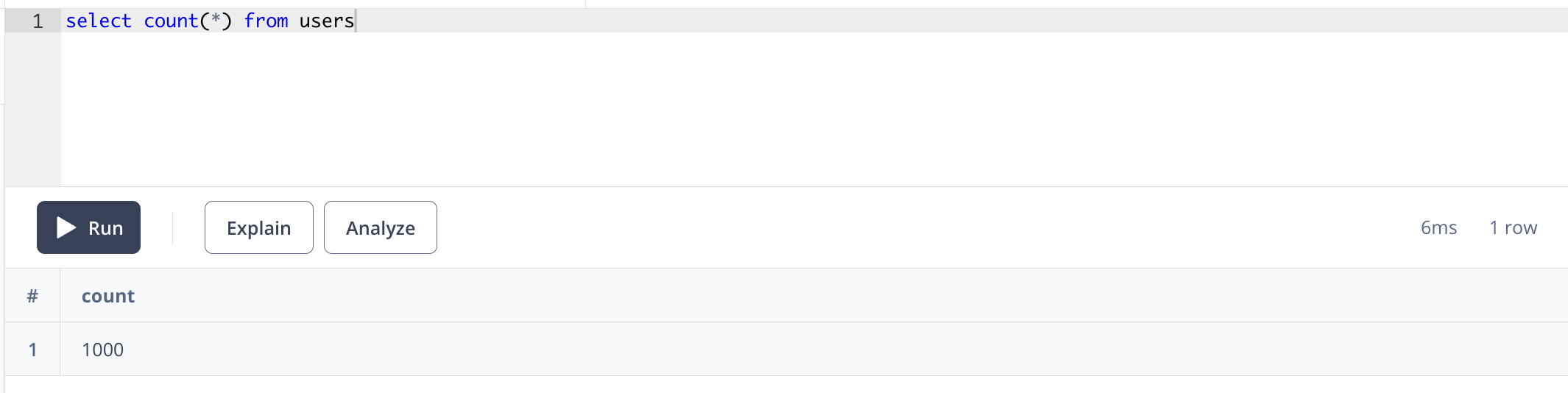

Now we can head back over to Neon and check on our data. First let's check the count and make sure we generated 1000 rows.

SELECT COUNT(*) FROM users;

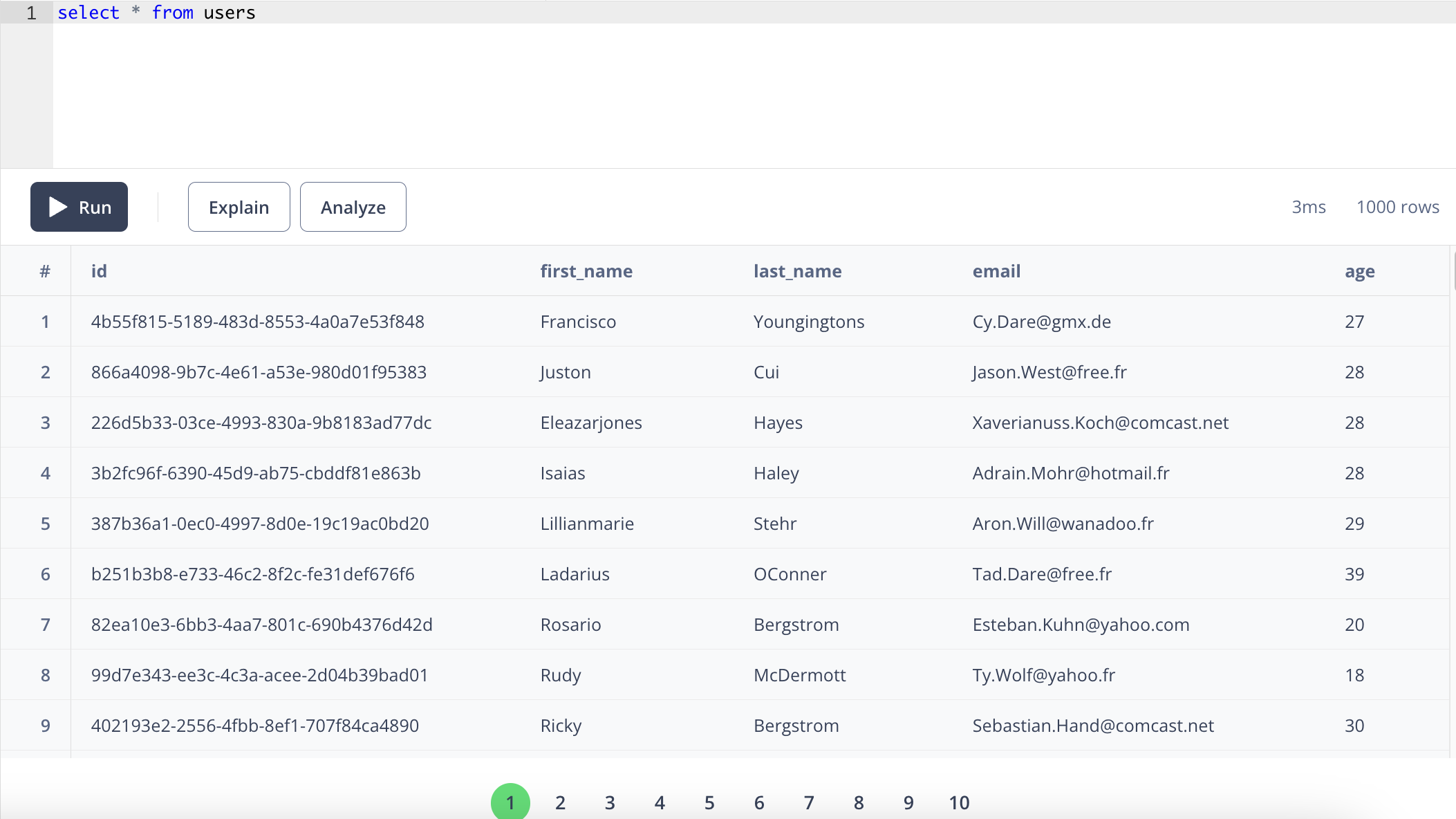

Next, let's check the data:

SELECT * FROM users;

Looking pretty good! We have seeded our Neon database with 1000 rows of completely synthetic data and it only took 12 seconds.
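If you want to run these checks from a script instead of the SQL editor, a small helper along these lines could work. It's a sketch under a few assumptions: `check_seed` is a hypothetical name, and `conn` is any Python DB-API connection to the database that holds the users table (for example, one opened with a Postgres driver such as psycopg). It confirms the row count, that the ids are unique, and that the ages fall inside the range we configured.

```python
def check_seed(conn, expected_rows, min_age, max_age):
    """Run basic sanity checks on the seeded users table.

    `conn` is assumed to be a DB-API 2.0 connection.
    """
    cur = conn.cursor()
    cur.execute(
        "SELECT COUNT(*), COUNT(DISTINCT id), MIN(age), MAX(age) FROM users"
    )
    total, distinct_ids, youngest, oldest = cur.fetchone()
    cur.close()

    if total == 0:
        # MIN/MAX return NULL on an empty table, so stop early.
        return {
            "row_count_ok": expected_rows == 0,
            "ids_unique": True,
            "ages_in_range": True,
        }

    return {
        "row_count_ok": total == expected_rows,
        "ids_unique": distinct_ids == total,
        "ages_in_range": youngest >= min_age and oldest <= max_age,
    }
```

For the run in this guide, you would call `check_seed(conn, 1000, 18, 40)` and expect every value in the returned dictionary to be `True`.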

In this guide, we walked through how to seed your Neon database with 1000 rows of synthetic data using Neosync. This is just a small test and you can expand this to generate tens of thousands or more rows of data across any relational database. Neosync handles the referential integrity. This is particularly helpful if you're working on a new application and don't have data yet or want to augment your existing database with more data for performance testing.
